Understanding the Inner Workings of Explainable AI (XAI)

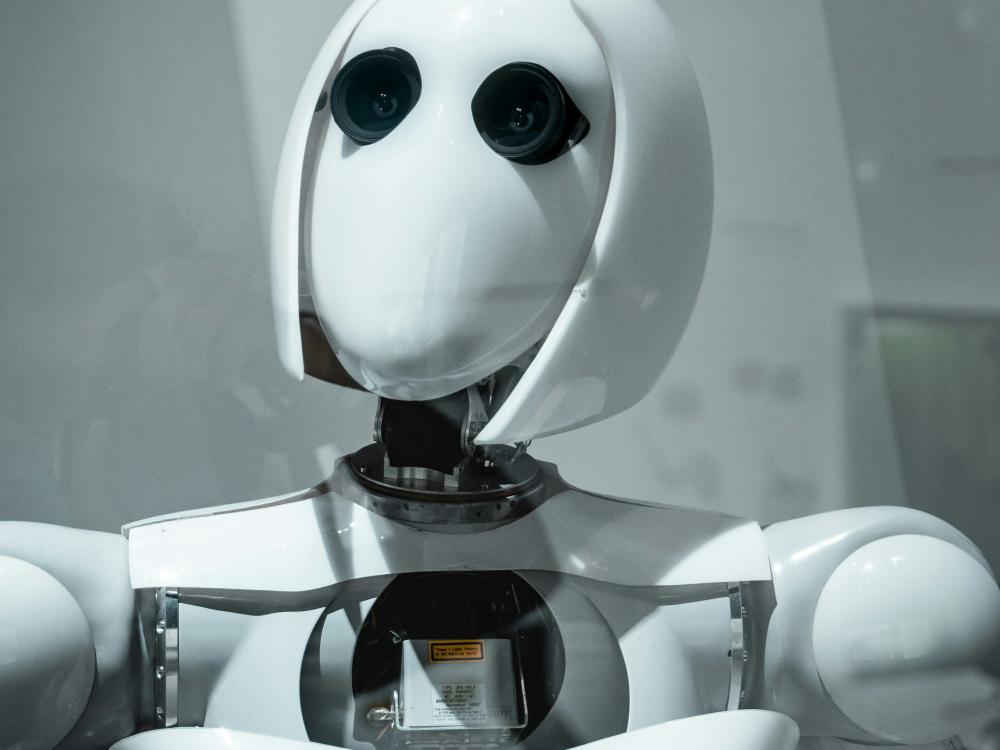

We are constantly striving to unlock the full potential of artificial intelligence, and one area that has gained significant attention in recent years is explainable AI (XAI). In this article, we examine the core ideas behind XAI and how they work in practice.

The Need for Explainable AI

AI systems are increasingly being integrated into a wide range of industries. As these systems become more complex and autonomous, understanding how they reach their decisions becomes crucial. Many high-performing models, such as deep neural networks and large ensembles, behave as black boxes: their internal logic is too complex for a person to follow, which makes it hard to tell why a particular decision was made. This is where explainable AI comes into play.

The Fundamentals of XAI

Explainable AI refers to methods and models that not only provide accurate predictions but also reveal how those predictions are reached, either because the model is interpretable by design or because explanations are produced for it afterwards. It aims to make the decision-making process transparent and understandable to humans, fostering trust and enabling effective collaboration between humans and AI systems.

Interpretability: One key aspect of XAI is interpretability, which involves providing human-understandable explanations for a model's outputs. This can be achieved through techniques such as feature importance analysis, rule extraction, or generating natural language explanations.
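
As one concrete form of feature importance analysis, permutation importance shuffles one feature at a time and measures how much the model's error grows. The sketch below is plain Python so it runs without dependencies; `toy_model` and the synthetic data are hypothetical stand-ins for a trained model and a real dataset.

```python
import random
from statistics import mean


def toy_model(row):
    # Hypothetical "black box": relies heavily on feature 0,
    # slightly on feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]


def mse(y_true, y_pred):
    return mean((a - b) ** 2 for a, b in zip(y_true, y_pred))


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, [model(row) for row in X_perm]) - baseline)
        importances.append(mean(increases))
    return importances


data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]  # noise-free targets, for illustration only
importances = permutation_importance(toy_model, X, y)
print([round(v, 3) for v in importances])
```

Feature 0 comes out far ahead, feature 1 is small, and feature 2 scores exactly zero because the model never reads it. Since the method only calls the model, the same function works for any model that maps a row to a number.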

Causal Reasoning: Another important component of XAI is causal reasoning. By changing one input while holding the others fixed and observing how the prediction responds, we can identify which features actually drive a specific prediction and by how much. Note that this reveals cause and effect within the model; whether the same relationship holds in the real world is a separate question that the model alone cannot answer.
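
Within a model, this kind of what-if question can be asked directly: set one input to a new value, hold every other input fixed, and compare the outputs. The sketch below assumes a hypothetical credit-scoring rule (`score`, with made-up feature names, weights, and threshold) purely for illustration.

```python
def score(applicant):
    # Hypothetical scoring rule: approve (1) when the weighted sum reaches 2.0.
    z = (0.04 * applicant["income"]
         - 0.5 * applicant["debts"]
         + 0.1 * applicant["years_employed"])
    return 1 if z >= 2.0 else 0


def intervention_effect(model, instance, feature, new_value):
    """Change in model output when only `feature` is set to `new_value`."""
    counterfactual = dict(instance)  # copy so the original instance is untouched
    counterfactual[feature] = new_value
    return model(counterfactual) - model(instance)


applicant = {"income": 50, "debts": 2, "years_employed": 2}  # score: 0 (declined)
what_ifs = {"income": 80, "debts": 0, "years_employed": 4}
effects = {f: intervention_effect(score, applicant, f, v) for f, v in what_ifs.items()}
print(effects)  # {'income': 1, 'debts': 1, 'years_employed': 0}
```

Each effect describes this model's behaviour, not the world: raising income flips the model's decision, which on its own says nothing about whether income causes repayment.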

Evaluating Uncertainty: XAI also focuses on quantifying and communicating uncertainty. AI models often encounter inputs where the outcome is uncertain or ambiguous, for example inputs unlike anything in the training data. By incorporating uncertainty estimation techniques, such as ensembles or prediction intervals, we can give users a measure of how much confidence to place in each prediction.
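
One simple way to estimate uncertainty is a bootstrap ensemble: fit several copies of a model on resampled data and report the spread of their predictions alongside the average. The sketch below uses a straight-line fit on synthetic data; the data, the ensemble size, and the function names are assumptions for illustration.

```python
import random
from statistics import mean, stdev


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def bootstrap_predict(xs, ys, x_new, n_models=200, seed=0):
    """Mean and standard deviation of predictions from a bootstrap ensemble."""
    rng = random.Random(seed)
    preds = []
    while len(preds) < n_models:
        idx = rng.choices(range(len(xs)), k=len(xs))  # sample rows with replacement
        sx = [xs[i] for i in idx]
        if len(set(sx)) < 2:
            continue  # all x values identical: slope undefined, so resample
        a, b = fit_line(sx, [ys[i] for i in idx])
        preds.append(a + b * x_new)
    return mean(preds), stdev(preds)


noise = random.Random(1)
xs = [i * 0.5 for i in range(21)]  # training inputs cover 0.0 to 10.0
ys = [2 * x + 1 + noise.gauss(0, 1) for x in xs]

m_in, s_in = bootstrap_predict(xs, ys, 5.0)     # inside the training range
m_out, s_out = bootstrap_predict(xs, ys, 30.0)  # far outside it
print(f"x=5:  {m_in:.2f} +/- {s_in:.2f}")
print(f"x=30: {m_out:.2f} +/- {s_out:.2f}")
```

The spread at x=30 comes out wider than at x=5, which is the signal a user needs: the model is extrapolating there, and its answer deserves less confidence.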

Building Explainable AI Models

Developing explainable AI models requires a combination of careful design choices and advanced techniques. Here are some key considerations:

Data Preprocessing: Properly cleaning and preprocessing data is essential to ensure accurate and meaningful explanations. This includes handling missing values, addressing outliers, and normalizing features.
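
The three steps can be sketched for a single numeric column: median imputation for missing values, clipping to Tukey's fences (1.5 times the interquartile range beyond the quartiles) for outliers, and z-score standardization. These particular choices are one common option among several, and the sample values are made up.

```python
from statistics import mean, median, pstdev, quantiles


def preprocess(values):
    """Impute, clip, and standardize one numeric column (None marks a missing value)."""
    # 1. Replace missing entries with the median of the observed values.
    observed = [v for v in values if v is not None]
    fill = median(observed)
    filled = [fill if v is None else v for v in values]

    # 2. Clip outliers to Tukey's fences: Q1 - 1.5*IQR and Q3 + 1.5*IQR.
    q1, _, q3 = quantiles(filled, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    clipped = [min(max(v, low), high) for v in filled]

    # 3. Standardize to zero mean and unit (population) standard deviation.
    mu, sigma = mean(clipped), pstdev(clipped)
    if sigma == 0:
        return [0.0] * len(clipped)  # a constant column carries no information
    return [(v - mu) / sigma for v in clipped]


raw = [10, 12, None, 11, 13, 500, 12]  # one missing value, one extreme outlier
scaled = preprocess(raw)
print([round(z, 2) for z in scaled])
```

In a real pipeline, the median, fences, mean, and standard deviation should be computed on the training data only and then reused on new data, so that test data does not leak into preprocessing.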

Model Selection: Different machine learning algorithms have varying levels of interpretability. When interpretability is a priority, it is important to choose algorithms that are transparent by design, such as decision trees or linear models, whose logic can be read directly from their splits or coefficients.
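
To see why trees count as transparent, consider the smallest possible one, a depth-1 decision tree (a "decision stump"). The sketch below finds it by brute force, trying every feature and threshold, and prints the learned model as a readable rule. The feature names and records are hypothetical.

```python
def fit_stump(X, y, feature_names):
    """Return the single 'if feature <= threshold' rule with the fewest training errors."""
    best = None
    for j, name in enumerate(feature_names):
        for t in sorted({row[j] for row in X}):  # candidate thresholds
            for left in (0, 1):  # label predicted when row[j] <= t
                preds = [left if row[j] <= t else 1 - left for row in X]
                errors = sum(p != target for p, target in zip(preds, y))
                if best is None or errors < best[0]:
                    best = (errors, name, t, left)
    errors, name, t, left = best
    return f"if {name} <= {t} then predict {left} else predict {1 - left}", errors


feature_names = ["age", "blood_pressure"]
X = [[25, 118], [62, 125], [35, 128], [30, 135], [50, 142], [45, 150]]
y = [0, 0, 0, 1, 1, 1]
rule, errors = fit_stump(X, y, feature_names)
print(rule, f"({errors} training errors)")
```

The entire model is the printed rule, so anyone can audit it. Deeper trees remain readable as nested rules, though they become harder to follow as they grow.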

Feature Engineering: Crafting informative features can greatly enhance the interpretability of the model. Feature engineering involves selecting relevant input variables, transforming them appropriately, and creating new features if needed.
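
As a small illustration, the sketch below turns a hypothetical raw record into features a person can reason about directly: a debt-to-income ratio, a log-scaled income, and an age band. The field names and the choice of derived features are assumptions, not a recommended schema.

```python
import math


def engineer_features(record):
    """Derive human-readable features from a hypothetical raw record."""
    income = record["income"]
    age = record["current_year"] - record["birth_year"]
    return {
        # One ratio is easier to interpret than two raw amounts.
        "debt_to_income": record["debt"] / income if income > 0 else None,
        # log1p compresses a long-tailed amount and is defined at 0.
        "log_income": math.log1p(income),
        # Coarse bands are easy to explain ("applicants in their 30s ...").
        "age_band": f"{(age // 10) * 10}s",
    }


features = engineer_features(
    {"income": 50_000, "debt": 10_000, "birth_year": 1990, "current_year": 2024}
)
print(features)
```

With a single `debt_to_income` feature, an explanation can point to one number being high rather than to an interaction between two separate columns.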

Post-hoc Explanation Techniques: Post-hoc explanation methods can be applied to existing black-box models to make them more interpretable. Rather than changing the model, these techniques generate explanations after it has been trained, typically by probing it with modified inputs and observing how its outputs change. Widely used examples include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).
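
SHAP is built on Shapley values from cooperative game theory. For a model with only a few features they can be computed exactly by enumerating every subset of features, as in the sketch below. This is a from-scratch illustration, not the `shap` library's API: it assumes that a feature absent from a subset is replaced by a baseline value, and the model and inputs are hypothetical. The cost grows exponentially with the number of features, which is why practical tools rely on approximations or model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values, treating features outside a coalition as set to `baseline`."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions of 0 .. n-1 other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(z):
    # Hypothetical model: a linear term plus an interaction between features 1 and 2.
    return 2 * z[0] + z[1] * z[2]


x, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The values add up to `model(x) - model(baseline)` (here 8). This additivity is what lets SHAP present an explanation as a breakdown of one prediction; note how the interaction term's contribution of 6 is split evenly between features 1 and 2.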

The Benefits of Explainable AI

The adoption of explainable AI brings several advantages across various domains:

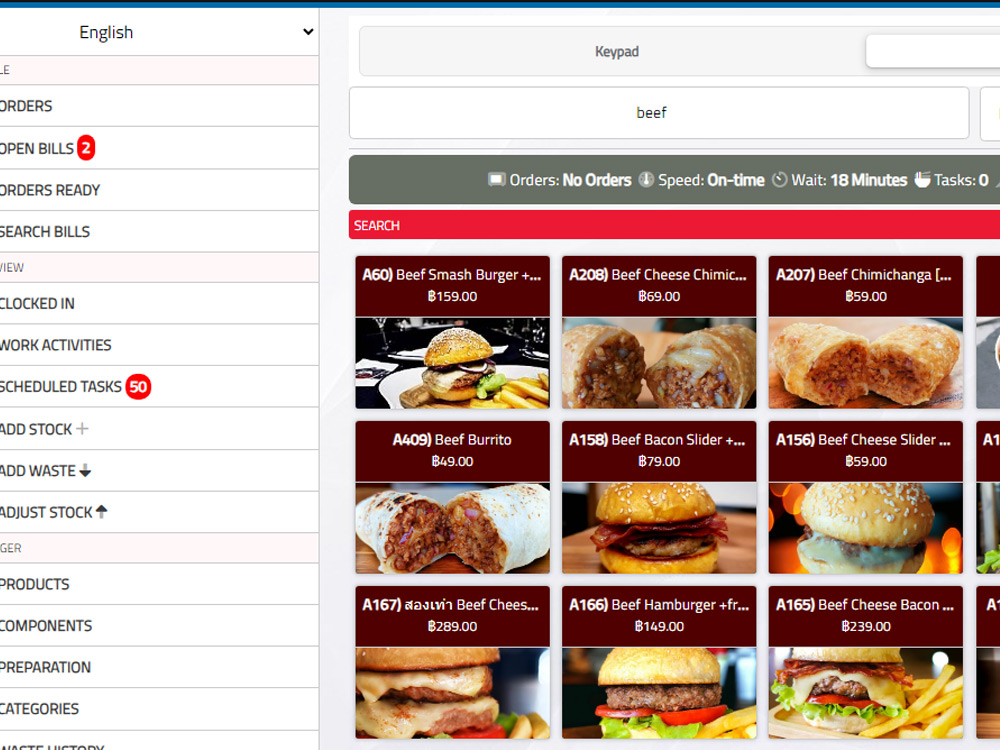

Trustworthiness: By understanding why an AI system makes certain decisions, users can develop well-founded trust in the technology, relying on its recommendations when the reasoning holds up and questioning them when it does not.

Ethical Considerations: XAI allows us to identify potential biases or discriminatory patterns within a model, supporting efforts to ensure fairness and reducing the risk of unintended consequences.

Regulatory Compliance: In sectors with strict regulations, explainable AI provides a means to satisfy compliance requirements by enabling auditors and regulators to understand and validate the decision-making process.

Improved Collaboration: Explainable AI promotes collaboration between humans and machines. With understandable explanations, people can work alongside AI systems more effectively, leveraging the systems' strengths while compensating for their limitations.

Explainable AI plays a crucial role in bridging the gap between complex machine learning models and human understanding. By incorporating interpretability, causal reasoning, and uncertainty evaluation techniques, we can unlock the true potential of AI while ensuring transparency, trustworthiness, and ethical use. It is our responsibility to continue pushing the boundaries of XAI and harnessing its power for positive societal impact.